“Do you feel that ChatGPT understands you? Microsoft Copilot? To a certain degree, I do, yes… I did not expect this just three years ago, but it happened. But is it true understanding? I don’t know.” - Prof. Lingpeng Kong

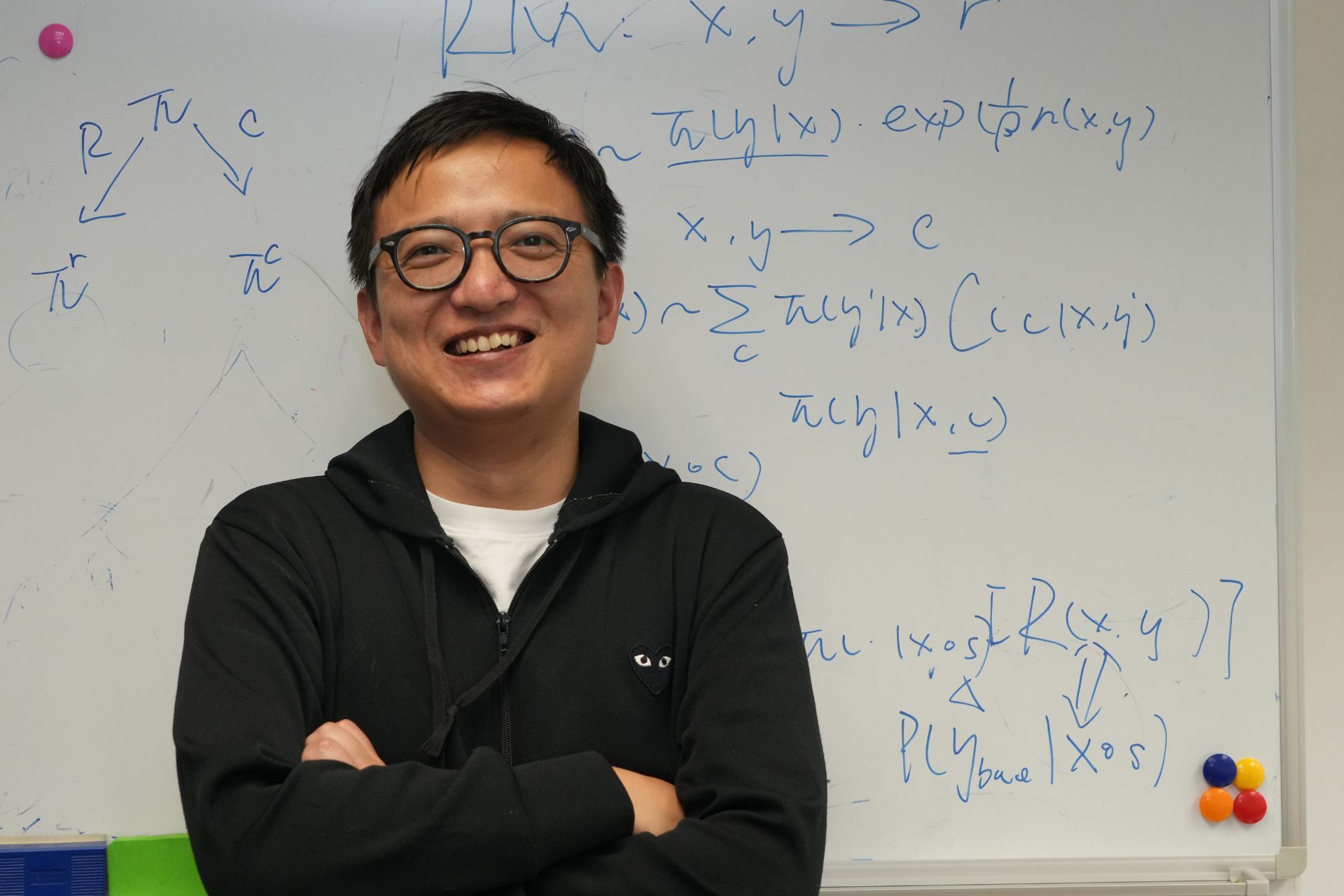

Professor Lingpeng Kong’s research at HKU’s Department of Computer Science focuses on natural language processing (NLP). Before joining HKU, Professor Kong worked at the AI research laboratory Google DeepMind in London.

❓ Dr Pavel Toropov: Your research profile says that you “tackle core problems in natural language processing by designing representation learning algorithms that exploit linguistic structures.” What does that mean, in simple terms?

💬 Professor Lingpeng Kong: We teach computers how to understand human language and speak like a human.

❓ And what is the main difficulty for a computer in doing that?

💬 The ambiguity of human language. We humans have a lot of ambiguity in our speech, for example: “The man is looking at a woman with a telescope.” Does the woman have a telescope? Is the man using a telescope to look at the woman?

This is called the prepositional phrase attachment problem. Modern language processing is built around statistical methods, but you always have a lot of boundary cases that you cannot fully and efficiently model.
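To make the telescope example concrete, here is a minimal Python sketch that spells out the two readings as explicit bracketings. It is only an illustration: the `Reading` class and the `attachment_readings` function are hypothetical names invented for this sketch, not something described in the interview, and a real parser would have to score the readings rather than just list them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    attaches_to: str  # what the prepositional phrase modifies
    bracketing: str   # the sentence with the grouping made explicit


def attachment_readings(subject: str, verb: str, obj: str, pp: str) -> list[Reading]:
    """List the two ways a trailing prepositional phrase can attach."""
    return [
        # The phrase modifies the verb: the looking is done with a telescope.
        Reading("verb", f"{subject} [[{verb} {obj}] {pp}]"),
        # The phrase modifies the object: the woman has a telescope.
        Reading("object", f"{subject} [{verb} [{obj} {pp}]]"),
    ]


for r in attachment_readings("the man", "is looking at", "a woman", "with a telescope"):
    print(f"{r.attaches_to:>6}: {r.bracketing}")
```

Both bracketings are grammatical, so the choice between them has to come from context or from statistics learned from data.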

❓ Humans figure out such boundary cases easily, from context. Why can’t computers do that?

💬 Because there is an exponentially large space to search. We must find an efficient way to search it within the limits of computational resources and memory. That’s the difficult part: building a statistical method to model all of that.

Also, it is difficult with low-resource languages. For example, Swahili – we don’t have enough data to train the system to work efficiently.

I think the good thing is that, with the current development of deep learning, we can build models with large exponential value, and we can solve a lot of problems that in the past we could not have imagined being able to solve. That is why people are excited about AI.

You learn about things and you learn to generalise to things you have not thought of before.

It is a matter of which model, which algorithm, can generalise the best from less data and less computation. Nowadays we need very large amounts of data to train systems, basically the whole of the Internet.

❓ You also work on machine translation. The quality of machine translation seems very good now, much better than just a few years ago.

💬 I feel like the problems with machine translation have been solved! It has been developing very fast. Ten years ago there were translation ambiguities that you could not solve well, but today we have large language models.

ChatGPT translates really, really well! I think when it comes to technical documents and everyday emails, it does better than me in Chinese to English translation. Nowadays, if I write an email in Chinese and translate it into English, I only have to modify very, very few things.

❓ So will translators be replaced by AI?

💬 I think it is already happening now. Technology has advanced so far that some things that were very difficult in the past are not that difficult today.

Machine translation is just conditional language generation – for example, conditioning on the Chinese part to generate the English part and represent the same meaning. There are a lot of conditional generation problems like this – condition on your prompts to generate the next thing.

Everything is inside one model now, the big language model. Before, question answering had its own system, machine translation had its own system, so did creative writing… but now it is all the same system, it is only the prompt that is different.

❓ What prevents machines from understanding humans?

💬 Nothing, but there is always a philosophical debate about what true understanding is.

Do you feel that ChatGPT understands you? Microsoft Copilot? To a certain degree, I do, yes… I did not expect this just three years ago, but it happened. But is it true understanding? I don’t know.

I like to do tests – I give song lyrics (to AI) and I ask: what does this mean? And it tells me, for example: sometimes times are hard, but things will be better. I still feel that it is not quite a human being talking to me, but maybe that is because I know that the result is coming from a lot of computation.

But if you do what is called the Turing Test – differentiate between talking to ChatGPT and talking to a human being – then it is hard, really hard. I don’t think I can guess right more than 60 or 70% of the time.

❓ What allowed AI to communicate like that?

💬 We had never, in human history, trained a model of that size before. Before COVID, the largest language model had roughly 600 million parameters. Today, we have a model, an open-source one, with 405 billion parameters. We never had the chance before to turn this quantity of data, such a large amount of computation, into knowledge inside computers, and now we can.

❓ What is the current direction of your research?

💬 Our group works mainly on discovering new machine learning architectures. When you talk with ChatGPT, after about 4000 words, it forgets. The longer you talk to it, the more likely it is not to remember things. These are fundamental problems with machine learning architectures. This is one of the things we are trying to solve.

The machine learning model behind ChatGPT is called the Transformer. It is a neural network. It can model sequences and is used everywhere, for example in the AI program called AlphaFold, which works with proteins.

One direction of our work is making the Transformer better in terms of efficiency and modelling power, so that we can have a Transformer that works with ultra-long sequences and does not forget.

The second direction is pushing the boundary of the reasoning limits of current language models. I have a team working on problems from the International Mathematical Olympiad. We can now use large language models to solve those problems. It is doing really well.

👏 Thank you, Professor Kong!