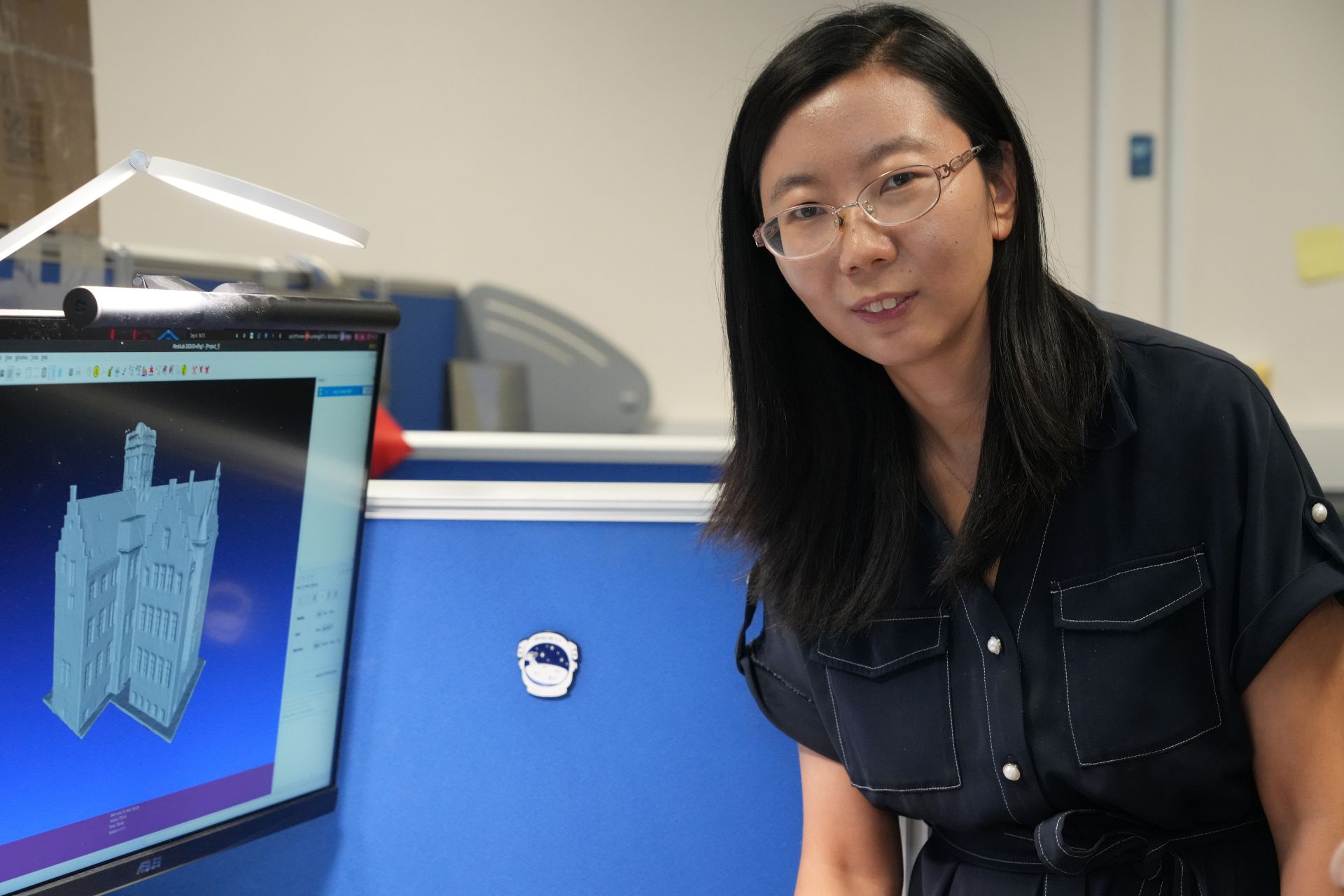

Professor Xiaojuan Qi works at the Department of Electrical and Electronic Engineering at HKU where she is a member of the Deep Vision Lab. Her work covers deep learning, computer vision and artificial intelligence. In this interview with our science editor, Dr Pavel Toropov, Professor Qi talks about self-driving cars and building virtual worlds.

❓ Dr Pavel Toropov: What is the main direction of your work?

💬 Professor Xiaojuan Qi: Computer vision and artificial intelligence. To put it simply, computer vision means giving machines the capability to see. Humans can see the 3D world – the objects, the relationships between them, and a lot of semantics. Then we make decisions for our many activities in the 3D world.

In order for a robot, a machine, to go around this world, it must also be able to see. It must recognise different objects and estimate their geometry. This has a lot of applications, one of which is self-driving cars. For a car to be able to drive automatically, it must be able to see what is in front of it and what obstacles there are, forecast the behaviour of other agents, and plan how to drive safely.

An automated driving system has several parts. One part is about perception – how can a car get knowledge from the environment? Most of this knowledge is visual data from digital cameras and Lidar. Lidar is used to detect 3D objects, and, based on that data, the car can make decisions, like adjusting speed or turning. Our algorithm helps the machine better analyse this data, better understand what is happening around it.
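
As an editorial illustration of the step from perception to decision described above, here is a minimal sketch in Python. It is not the lab's algorithm. The `Obstacle` record, the `choose_speed` function, and the braking and margin values are all hypothetical, and the detections are assumed to come from an upstream Lidar or camera model.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    """One detected object (hypothetical output of a perception model)."""
    label: str
    distance_m: float  # distance ahead of the car, in metres
    in_lane: bool      # True if the object is in the car's own lane


def choose_speed(obstacles, current_speed_mps,
                 max_decel_mps2=4.0, margin_m=5.0):
    """Return a speed from which the car can stop before the nearest
    in-lane obstacle, never exceeding the current speed.

    max_decel_mps2 and margin_m are assumed, illustrative values.
    """
    ahead = [o.distance_m for o in obstacles if o.in_lane]
    if not ahead:
        return current_speed_mps  # nothing in the lane: keep speed
    usable_m = max(min(ahead) - margin_m, 0.0)
    # Stopping distance d = v^2 / (2a), so the safe speed is sqrt(2 a d).
    safe_speed = (2.0 * max_decel_mps2 * usable_m) ** 0.5
    return min(current_speed_mps, safe_speed)


detections = [
    Obstacle("car", 30.0, True),
    Obstacle("tree", 10.0, False),  # beside the road, ignored
]
target_speed = choose_speed(detections, current_speed_mps=20.0)
```

A real system would also forecast how the other agents move, which this sketch leaves out.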

Another application is medical. We develop AI that automatically analyses medical images, to make diagnoses more informed and more precise and to reduce the possibility of mistreatment.

Another exciting area is AI for science. I am collaborating with the Department of Chemistry, and we have developed an AI algorithm to improve the resolution of electron microscope images. This can help biologists make discoveries.

❓ Automated driving and AI are not new. What does your research contribute to this field? What are your strengths?

💬 In order to test if an automated car can drive safely, we need a simulation platform. What we are currently doing is building a simulation environment so that we can help train the models and evaluate whether the car can drive safely in a real environment. Do you know (the massively popular computer game) Wukong?

❓ Of course!

💬 The scenes in this game look very real, and the reason is that the developers used Lidar to scan objects, historical buildings especially, in Shanxi province (of China). They did a reconstruction of them and imported them into the virtual environment – the computer game.

This is very similar to what I am doing. Using such scans, but without relying on expensive Lidar scanning techniques, we reconstruct the world into virtual space, mostly using images shot with a digital camera. We create a completely new reality!

Another strength is that we are working to make the algorithm run on casually captured data. For example, in Wukong, they needed experts to scan objects and do reconstruction, but what we are doing allows anyone, not only experts, to use their phones to scan. Then we can make algorithms that can reconstruct the scenes.

❓ So you reconstruct, or build, a new reality, a virtual world, to train or test automated cars and robots?

💬 Yes. We can use Lidar or digital camera scans of a room or a city and turn the real world into a digital space using algorithms. Besides, we also create models that can generate 3D objects, such as tables and chairs. And in this reconstructed or recreated digital world we can train our algorithm and test if it makes mistakes or not.

❓ What is the advantage of using the virtual world for training and evaluating algorithms?

💬 We can get data from interactions – for example a cleaning robot must move a table in the virtual world – and this can then be used to train agents – robots – to interact with the real, physical world. Training in the real world is expensive, and not safe – the robot can break objects, harm humans. But in the virtual world we can produce an infinite amount of data and interactions.

Besides, we can create what are called corner cases and improve safety. These are cases that happen very rarely in reality, but are critical – for example, two cars colliding. We can create these scenarios and let the car learn what to do.

❓ Have you partnered with anyone in the industry?

💬 We work with APAS (Hong Kong Automotive Platforms and Application Systems R&D Centre, set up by the Hong Kong SAR government). We have a collaborative project in automated driving. It’s a Hong Kong-based company with branches in Mainland China. There is also (the car hailing app) Didi. And we have collaborations with Google, Tencent and ByteDance.

❓ What is the main difficulty machines have when trying to see the world?

💬 The variety and the diversity of data within the environment. For example, we are in this room and it is now bright, but when it is dark, or when the weather is different, it becomes much more challenging for the model, for the machine, to recognise the same objects.

The (car) camera will capture different viewpoints, under different lighting conditions, weather conditions… all these variations make this problem very complicated for machines, even though for humans it is very easy to interpret objects under different conditions.

So, in order for a machine to recognise an object properly, we must include this object in its training data, and to be robust, the model must have a lot of training data to cover all the potential scenarios. If one is not covered, there will be a lot of mistakes in the deployment stage.

For example, cars in the US and Europe are different sizes. This also creates difficulties when developing 3D detection models. If a model is trained only on data collected in the USA and then applied in Europe, it may make mistakes. This is why companies have to develop foundation models, which are designed to be large and to take in large amounts of data. The assumption is that the data can cover the diversity of the real world. ChatGPT is a huge model with hundreds of billions of parameters. It is trained on data from the entire Internet, but it also makes mistakes.
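
The car-size example can be illustrated with a toy sketch in Python. It is an editorial illustration and not a real 3D detection model: the "model" here is only a range of car lengths learned from one region, and every length below is an invented number chosen for illustration, not measured data. The names `fit_length_prior` and `fraction_outside` are hypothetical.

```python
import statistics


def fit_length_prior(train_lengths_m, k=2.0):
    """Learn the range of car lengths seen in training: mean +/- k std."""
    mean = statistics.mean(train_lengths_m)
    std = statistics.stdev(train_lengths_m)
    return (mean - k * std, mean + k * std)


def fraction_outside(prior, lengths_m):
    """Fraction of cars whose length falls outside the learned range."""
    low, high = prior
    outside = [x for x in lengths_m if not (low <= x <= high)]
    return len(outside) / len(lengths_m)


# Invented, illustrative car lengths in metres (NOT real measurements).
region_a = [5.0, 5.2, 4.9, 5.4, 5.1, 5.3]  # training region, larger cars
region_b = [3.6, 4.0, 4.2, 3.9, 4.4, 5.0]  # deployment region, smaller cars

prior = fit_length_prior(region_a)
missed_at_home = fraction_outside(prior, region_a)
missed_abroad = fraction_outside(prior, region_b)
```

With these invented numbers, every training-region car fits the learned range, while most cars from the second region fall outside it. This is the kind of gap that broader training data is meant to close.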

❓ Self-driving cars are already on the road in Mainland China, correct?

💬 Yes. Such cars are already on the road. Baidu already has self-driving cars in China. I am collaborating with Baidu. In the city of Wuhan, Baidu has a car service called LuoBo KuaiPao. There are no human drivers, but there is a human remote controller who can take over if a challenging scenario arises. One human controller can handle over 20 cars.

❓ When do you think self-driving cars will be as common as “normal” cars?

💬 It is coming. I think it will come in the next few years. The major issue is that humans cannot tolerate any mistakes from AI models. It is big news if a self-driving car makes a mistake, but humans also make mistakes. We need to accept that machines can make mistakes. Humans do, and they make a lot of mistakes! The issue is – how to make humans trust machines? We need human-machine collaboration.

👏 Thank you, Professor Qi.